Try out furniture layouts before you move.

1.

https://sourceforge.net/projects/sweethome3d/

You can furnish a room in 3D yourself and save the result. Highly recommended ^^

2.

http://www.homestyler.com/designer

You can furnish a room in 3D yourself and save the result. Highly recommended ^^

3.

http://www.vrmath.co.kr

It only covers specific apartment floor plans, but it still seems like a decent utility.

With a bit more polish it could become a good program...

4. Etc.

Downloading Autodesk software for students

http://students.autodesk.com/

http://download.autodesk.com/media/adn/AdskCompTech.html

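How to open a dial-type cabinet lock

cabinet (combination dial)

Using the mark at the top center of the dial (the 12 o'clock position) as the reference point,

turn the dial right 3 times, left 2 times, then right again 1 time.

For example, if the combination is 21-73-40:

1. Turn the dial right at least 3 times and stop at 21 (landing on exactly 3 turns is hard, so more is fine).

2. Turn the dial left 2 times and stop at 73 (you hear a slight click).

3. Turn the dial right again just once and stop at 40 (you hear a slight click).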
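The turning sequence above can be written down as a small helper. This is only an illustrative sketch: the function name `dial_steps` is made up here, and the turn counts (3 or more right, 2 left, 1 right) are simply the ones given in the steps above, which may differ for other lock models.

```python
def dial_steps(combination):
    """Return the turning instructions for a three-number dial combination.

    The turn counts follow the note above: right 3+ turns, left 2, right 1.
    """
    first, second, third = combination
    return [
        f"Turn right at least 3 times, stopping at {first}",
        f"Turn left 2 times, stopping at {second}",
        f"Turn right 1 time, stopping at {third}",
    ]


# The example combination from the note above.
for step in dial_steps((21, 73, 40)):
    print(step)
```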

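H/W - myLG070 router problems

I have been using myLG070 for a few days. The software version changed again today.

Perhaps because the service is still in its early days, calls fail far too often and the connection is unstable.

However, if LG Dacom fixes just the three points below, the 070 service could still succeed.

First, the problem with the handset.

1. It runs on 802.11g wireless LAN, so it uses an SSID. Naturally.

However, on the handset's wireless LAN settings screen, which hardly deserves to be called "settings",

the SSID myLG070 always sits firmly in first place,

and you cannot change the priority in which connections are first attempted.

The handset is simply made to look for the SSID myLG070 whenever it is switched on.

This is very unreasonable.

With the dedicated AP, however, there is myLGNet, the default SSID for computer traffic,

plus one more hidden SSID that appears to be used for the phone.

My guess is that this hidden SSID is named myLG070, and that the phone automatically connects through it with top priority.

Unsurprisingly, when only the dedicated AP is in use, the phone almost never picks the wrong SSID after a power cycle and fails to place calls.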

====================================================================================

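==> 앵 kindly shared a workaround for changing that priority. (Thank you. By the way, are you perhaps an LG employee? How do you know this?)

However, when I tried it myself, the dial tone sounded once and then nothing happened...

It seems the service code has been changed, or it leaked and was blocked.

1. Pick up the 070 phone and enter *25533#

2. Press the (call button). The [System Settings] screen below then appears.

-- 1. Ping test

2. myLG070 profile

3. Select 2, myLG070 profile.

4. Inside, you can choose either 1. Disable or 2. Enable.

Choosing 1, Disable, deactivates the default myLG070 profile so that it can no longer be selected,

which opens the way to giving the SSID of your own wireless router top priority.

5. To use the default profile again, go in the same way and enable it.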

====================================================================================

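2. Problem with the myLG070 dedicated AP (wired/wireless router): a call center employee told me the service has passed 300,000 users.

But Internet telephony is still not something older people can easily install and use;

the main user base is people who are comfortable with the Internet or who have tinkered with routers before.

So shouldn't LG Dacom have considered that those people probably already own a wireless router?

I too use a MIMO wireless router that performs better and is more convenient than the dedicated AP.

In the future I will be using 11n routers as well.

So, as a courtesy to people who do not use the low-performance dedicated AP, LG Dacom should boldly

open up the setting so that the SSID of the user's existing AP can be moved to top priority.

Only then will the rate of failed connections after power-cycling the handset go down.

3. Problem 2: for the reasons above, if myLG070 adopted a policy of embracing the 11g-or-better wireless routers already in wide use,

the company would be freed from the burden of always developing an up-to-date dedicated AP.

** What counts as an up-to-date AP? = These days, at least MIMO 11n draft 2.0 or so.....

*** A tip for using the myLG070 handset.

It is a bit of a hassle, but switching the handset from DHCP (automatic assignment) to a static IP seems to reduce

connection failures (an X over the phone icon) after a reboot or power cycle. Or is it just my impression?

Also, with encryption set to the minimum, 64-bit WEP, the handset showed more signal bars under the same conditions.

Keep this in mind when using it.

With the dedicated AP, however, you cannot change the default SSID, the security method, the IP, or anything else.

Perhaps that is simply more efficient from the service provider's point of view.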

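*** 2008-03-26 lg070 phone info as of today

s/w version: WPN480H-1.1.56

*** 2008-05-22 lg070 phone info as of today

s/w version: WPN480H-1.1.64 (what got better?)

[Source] myLG 070, myLGNet business jackpot: fix the router from the phone and the service will succeed. Fixing poor call quality. How to get calls to connect. | Author: 베이더경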

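apa-2000 firmware

apa-3000 firmware (manufactured by 에이엘테크)

- But there is no firmware to be found.. what gives

<How to reset the wireless router password>

Press the RESET button on the back of the router with a toothpick or similar for 4 to 10 seconds.

The LEDs then blink and the router restarts. If they did not blink, hold the button longer.

The default network key is reset to 123456789a.

The router login password is reset to admin.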

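Symptom: copying files from a memory stick to the hard disk fails partway through with a "cannot copy" message

Fix: CHKDSK G: /R

Result: the copy then completed normally; to be safe, I backed up all the files and reformatted the drive.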

What to try when an iPod shuffle stops working

Resuscitation succeeded ^^

1. Download and run the reset utility

http://support.apple.com/kb/DL69

http://support.apple.com/downloads#ipod

2. Other methods

Unknown devices after downgrading a T61 to XP

>Network Controller - wireless LAN card

>Video Controller (VGA compatible) - graphics card

>Unknown device - TPM

>Unknown device - ACPI power management (ThinkPad PM Device)

>Biometric Coprocessor - fingerprint reader

>PCI Memory Controller - Turbo Memory

>PCI Device - IMSM

>SM Bus Controller - Intel chipset driver

>USB Controller - Intel chipset driver

>USB Controller - Intel chipset driver

Other things to install

ThinkPad Hotkey

http://www.ultrareach.com/

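A handy utility for reaching sites that are blocked in Korea

Simple and works well

Just unzip and run (it is not an installer)

To reach sites that are blocked as harmful,

use a filter-bypass program together with these DNS servers

DNS servers

Primary 208.67.222.222

Secondary 208.67.220.220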
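As a rough sketch of how the two DNS servers above could be applied from a script, the snippet below validates the addresses and builds (but does not run) `netsh` commands. The helper name `netsh_dns_commands` and the connection name "Local Area Connection" are assumptions for illustration, and the `netsh interface ip` form shown is the one commonly used on Windows XP; check it against your own system before running anything.

```python
import ipaddress

PRIMARY_DNS = "208.67.222.222"
SECONDARY_DNS = "208.67.220.220"


def netsh_dns_commands(interface_name, primary, secondary):
    """Build (but do not run) netsh commands that set static DNS servers."""
    # Validate the addresses first; ip_address raises ValueError on bad input.
    for address in (primary, secondary):
        ipaddress.ip_address(address)
    return [
        f'netsh interface ip set dns name="{interface_name}" static {primary}',
        f'netsh interface ip add dns name="{interface_name}" {secondary} index=2',
    ]


# "Local Area Connection" is an assumed connection name; check yours first.
for command in netsh_dns_commands("Local Area Connection", PRIMARY_DNS, SECONDARY_DNS):
    print(command)
```

The commands are only printed so they can be reviewed and pasted into a command prompt by hand.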

Downloading the Internet Explorer 6 SP1 offline installer

Internet Explorer 6 SP1 includes Internet Explorer 6.

About 50MB with all operating systems selected, 11.3MB with only Windows 2000 & XP selected

The following four components are installed.

Internet Explorer 6 Web Browser

Outlook Express

Windows Media Player

Windows Scripting Support

1. Download ie6setup.exe (474KB)

- Download the Korean Internet Explorer 6 SP1

http://download.microsoft.com/download/ie6sp1/finrel/6_sp1/W98NT42KMeXP/KO/ie6setup.exe

- Download the English Internet Explorer 6 SP1

http://download.microsoft.com/download/ie6sp1/finrel/6_sp1/W98NT42KMeXP/EN-US/ie6setup.exe

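2. Download

Assuming the downloaded ie6setup.exe is in C:\ie6, run the following command from the Run dialog or a command prompt.

"C:\ie6\ie6setup.exe" /c:"ie6wzd.exe /d /s:""#E"

(Include the quotation marks ["] as well.)

3. License agreement: Accept

4. Change the location where the setup files are saved: C:\down\

5. Select operating system: Windows 2000 & XP

6. Confirm the download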

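When Internet Explorer breaks on Windows XP, the usual fix is to update to the latest version or reinstall it.

But if the latest version is already installed, setup stops with the message 'A newer version of Internet Explorer is already installed on this system. Setup cannot continue.'

Internet Explorer can be reinstalled as follows.

1. Create a restore point.

(Use Start - Accessories - System Tools - System Restore to create a restore point before proceeding.)

2. Download IE6 from the Microsoft website, but do not run it yet.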

http://www.microsoft.com/downloads/details.aspx?displaylang=ko&FamilyID=1E1550CB-5E5D-48F5-B02B-20B602228DE6

3. Start - Run - type regedit - OK

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Active Setup\Installed Components\{89820200-ECBD-11CF-8B85-00AA005B4383}

With {89820200-ECBD-11CF-8B85-00AA005B4383} selected, use File - Export from the menu to back it up.

4. Once the key is backed up, delete the {89820200-ECBD-11CF-8B85-00AA005B4383} key.

To proceed without deleting it, double-click IsInstalled (REG_DWORD) in the right-hand pane of the {89820200-ECBD-11CF-8B85-00AA005B4383} key and change the value from 1 to 0.

5. After deleting it, close the window and run the IE6 installer you downloaded.

6. After installation, reboot and check.
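As an alternative to changing the IsInstalled value by hand in step 4, the same change could be expressed as a .reg file. The sketch below only generates the file text; the helper name `build_reg_file` is made up for illustration, and it assumes the key path given in step 3. Export a backup of the key first, as step 3 describes, before merging anything like this.

```python
KEY_PATH = (
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Active Setup"
    r"\Installed Components\{89820200-ECBD-11CF-8B85-00AA005B4383}"
)


def build_reg_file(key_path, is_installed):
    """Return .reg file text that sets the IsInstalled DWORD under key_path."""
    return (
        "Windows Registry Editor Version 5.00\r\n"
        "\r\n"
        f"[{key_path}]\r\n"
        # DWORD values in .reg files are written as 8 hexadecimal digits.
        f'"IsInstalled"=dword:{is_installed:08x}\r\n'
    )


# 0 marks the component as not installed, matching the 1 -> 0 change in step 4.
print(build_reg_file(KEY_PATH, 0))
```

Saving the printed text to a file with a .reg extension and double-clicking it would merge the value, in the same way an exported backup is restored.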

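If setup shows the same message, or reports that the software has not passed Windows Logo testing, continue with the steps below.

7. Repair-install Internet Explorer 6.

7-1. In Start - Run, type "rundll32.exe setupapi,InstallHinfSection DefaultInstall 132 %windir%\inf\ie.inf" (without the quotation marks) and click OK.

You may be asked to insert the installation CD. If so, insert the XP installation CD and point to the i386 folder.

7-2. When the repair installation finishes, reboot the computer.

If you have no installation CD, continue from step 8.

8. Stop the services of any running antivirus program and restart the Cryptographic service.

8-1. In Control Panel, open Administrative Tools and double-click Services

8-2. Select the antivirus-related services and stop them

8-3. Select the Microsoft Cryptographic service and restart it

9. Re-register the DLLs below.

9-1. Run the following commands from Start - Run.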

regsvr32 urlmon.dll

regsvr32 softpub.dll

regsvr32 wintrust.dll

regsvr32 initpki.dll

regsvr32 dssenh.dll

regsvr32 rsaenh.dll

regsvr32 gpkcsp.dll

regsvr32 sccbase.dll

regsvr32 slbcsp.dll

regsvr32 cryptdlg.dll

(Ignore any files that fail to register and move on to the next.)

10. Restart the system and reinstall the Internet Explorer 6.0 SP1 you downloaded.
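Typing the ten regsvr32 commands from step 9 one at a time is tedious, so they could be collected into a batch file. The sketch below only generates the batch text; the helper name `regsvr32_batch` is hypothetical, and using the `/s` (silent) switch is a choice made here, not something the steps above require.

```python
DLLS = [
    "urlmon.dll", "softpub.dll", "wintrust.dll", "initpki.dll", "dssenh.dll",
    "rsaenh.dll", "gpkcsp.dll", "sccbase.dll", "slbcsp.dll", "cryptdlg.dll",
]


def regsvr32_batch(dll_names):
    """Return batch-file text that re-registers each DLL in order."""
    lines = ["@echo off"]
    for name in dll_names:
        # /s suppresses the message boxes; a failed registration does not
        # stop the batch file, so the remaining DLLs are still processed.
        lines.append(f"regsvr32 /s {name}")
    return "\r\n".join(lines) + "\r\n"


print(regsvr32_batch(DLLS))
```

With `/s` you will not see which registrations failed; drop the switch if you want the confirmation dialogs.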

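Verifying the IE installation

To check whether IE installed successfully,

open the 'Active Setup Log.txt' file in the C:\Windows folder.

If the word 'failed' does not appear in it, you can trust that the installation went cleanly.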
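Instead of reading the log by eye, the check for the word 'failed' can be sketched in a few lines. The function name `failed_lines` and the sample log text are invented for illustration; the real file's exact wording and encoding are not known here.

```python
def failed_lines(log_text):
    """Return the lines of an Active Setup log that mention 'failed'."""
    return [line for line in log_text.splitlines() if "failed" in line.lower()]


# Hypothetical log content, made up purely for illustration.
sample = "Step one completed\nRegistration failed for example.dll\nDone"
print(failed_lines(sample))
```

To check a real log, read the file into a string first and pass it in; an empty result corresponds to the "no 'failed' anywhere" case described above.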

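ㅇ Backing up PuTTY settings

Quoted from http://kldp.org/node/42609

Run regedit, then

look under My Computer\HKEY_CURRENT_USER\Software\SimonTatham\PuTTY.

To save everything including host keys, export this whole PuTTY key (the thing that looks like a directory); otherwise export just the Sessions key beneath it. (If you want to save once and reuse the settings on several computers, export only Sessions.)

Select the key in the left-hand tree, then choose Registry -> Export Registry File -> Selected branch from the menu and save it under any name you like, such as "PuTTYHosts.reg".

Double-clicking this file imports the settings again. If you tidy up the hosts you use, build the file, and put it on the web, you can download just PuTTY and this file anywhere (PuTTY needs no installation) and start working right away, which is convenient.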

ㅇ Converting a PuTTY private key file to an OpenSSH key file

See http://kldp.org/node/74183

The public key can be copied from the top of the PuTTY Key Generator window,

and the private key can be saved as a pem file from the menu via Conversions->"Export OpenSSH key".

Add the public key to the authorized_keys file and save it as id_rsa.pub, id_dsa.pub, or similar;

save the private key as id_rsa or id_dsa.

ㅇ Moving a private key made with OpenSSH into PuTTY

Bring the key over to Windows and use Conversions -> Import key in the program.

One thing to watch out for: as shown below, there must be a newline (Enter) after the end of the key contents.

-----BEGIN RSA PRIVATE KEY-----

MIICWgIBAAKBgQCvxDhf4cFa8vUSLi1EZDSYFyzChwZ41Tfdv92ma/sN8RTQ4rKJ

iK2xQSjvKUIA0RC/ieWy70PfPvSNtsJIXw2xyGJ1YtvvY4Gjy+KYj+kwkMzXR0yU

+/UJF75G5k1M5BMV4BgLyr+5BkmFv/Vub8To6OTLeLp4AGL6QOKKm3vNiQIBIwKB

..... 중략

-----END RSA PRIVATE KEY-----

-->> one blank line here!!!
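The trailing-newline requirement above is easy to miss when a key is copied and pasted, so it may help to normalise the file before importing it. This is a minimal sketch under that assumption; the function name `ensure_trailing_newline` is made up, and the key body below is a placeholder, not a real key.

```python
def ensure_trailing_newline(key_text):
    """Return key_text guaranteed to end with exactly one newline."""
    # Strip any existing CR/LF characters at the end, then add a single LF.
    return key_text.rstrip("\r\n") + "\n"


# Placeholder key body; a real key has many base64 lines here.
key = "-----BEGIN RSA PRIVATE KEY-----\n...\n-----END RSA PRIVATE KEY-----"
fixed = ensure_trailing_newline(key)
print(fixed.endswith("-----END RSA PRIVATE KEY-----\n"))
```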

http://www.dd-wrt.com/wiki/index.php/WRT54G_v4_Installation_Tutorial

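I came across this material while getting around to a firmware upgrade.

Thanks to it, the stalls when pulling files are gone... (I only installed it today, so I cannot be sure) and it feels faster to me.

The important point about the firmware upgrade!!

Because of the size limit, you have to flash the mini build first and then move on to the full version..

There is also a WRT build.... the first time, not knowing any better, I upgraded with that one and ran into trouble....

I barely revived the router and switched to the generic build.

WRT54G v4 users, be sure to use the generic build.....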

mini :

dd-wrt.v23_mini_generic.bin

generic :

dd-wrt.v23_generic.bin

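The default ID and password are root / admin. Please don't panic......

This is my backup settings file (firewall OFF, password changed)

setting.bin

If the router's settings page (web access) stops working...

Hold down the Reset button on the back of the router until the Power LED blinks, and it is restored to Factory Default.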

WRT54G v4 Installation Tutorial

From DD-WRT Wiki

The following are the steps that I went through to get DD-WRT v23 installed on a WRT54G v4. I tried to take screenshots of each step as I went, so as not to confuse anyone. Following the instructions found at Steps_to_flash_through_Web_Interface, I was eventually successful in getting DD-WRT installed. I will use some of the text from those instructions as well as inserting my screenshots and other encounters not mentioned in the above link.

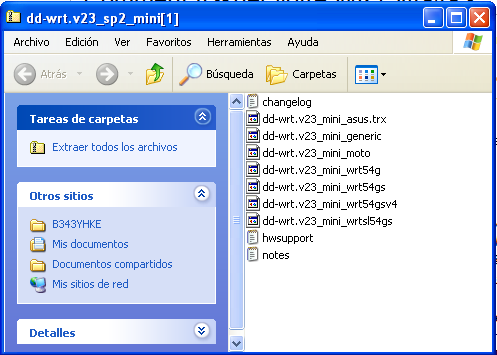

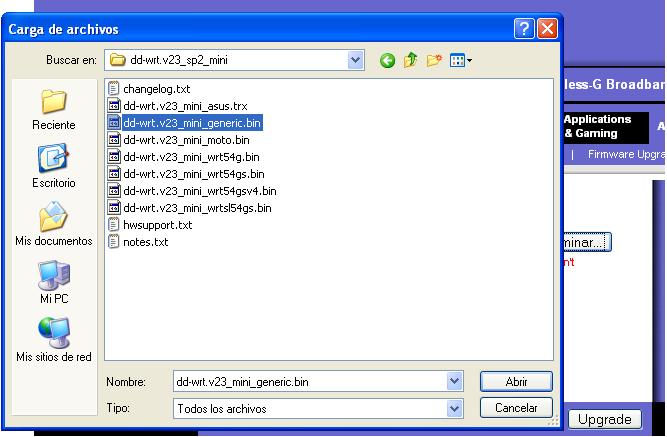

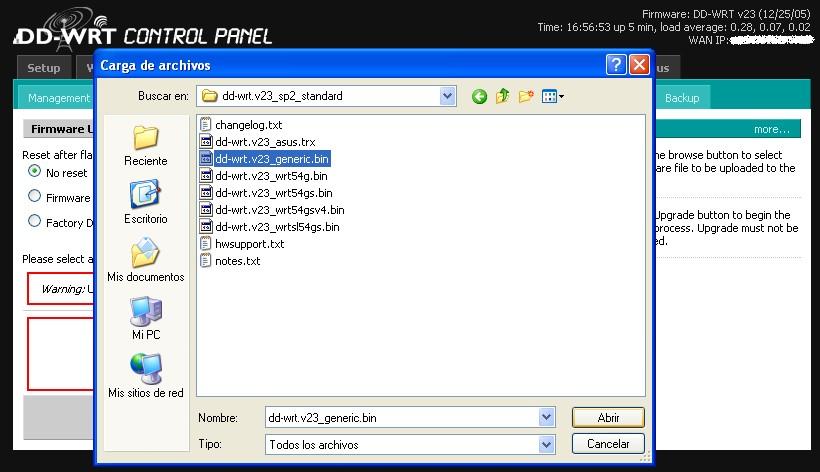

1) Download the DD-WRT v23 firmware

- You must use the mini version when upgrading from original linksys firmware!

- Download the Mini Version 23 HERE (dd-wrt.v23_sp2_mini.zip ) or go to the DD-WRT downloads section at http://www.dd-wrt.com/dd-wrtv3/dd-wrt/downloads.html

- Extract the archive. Once it is downloaded and extracted from the zip archive it should look something like this:

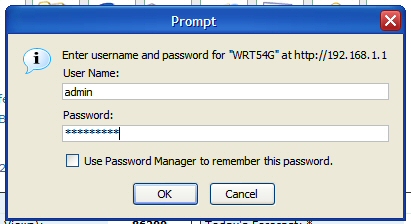

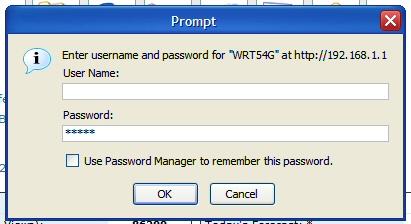

2a) Reset through the Web Interface

if you forgot the password or IP of your router, proceed to step 2b.

- From a PC connected to one of the 4 LAN ports on the router open a web browser and go to the IP of the router (default IP is 192.168.1.1).

- You will be prompted for username and password. Username is not required. Enter password (default password is admin) and you should be at the Web Interface of your WRT54G v4

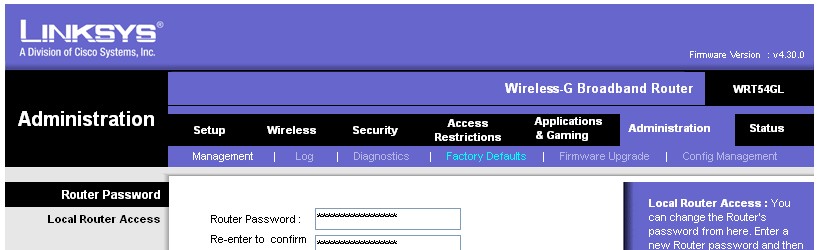

- Click the "Administration" tab.

- Click the "Factory Defaults" sub-tab.

- Select "Yes".

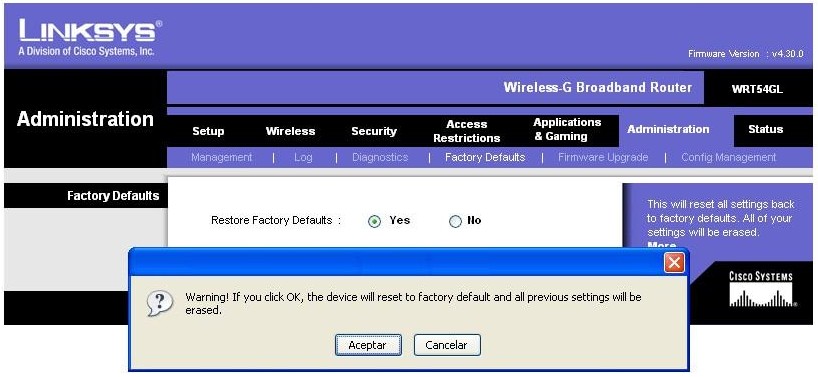

- Click the "Save Settings" button.

- A warning will pop up, click "ok".

2b) Reset to factory defaults

- Press and hold the reset button on the back of the router for 30 seconds. This will clear your NVRAM, the configuration and reset the password to admin.

Be aware that if your router currently has an OpenWRT firmware running on it, then using the reset button may brick your router. Research your current firmware to be safe.

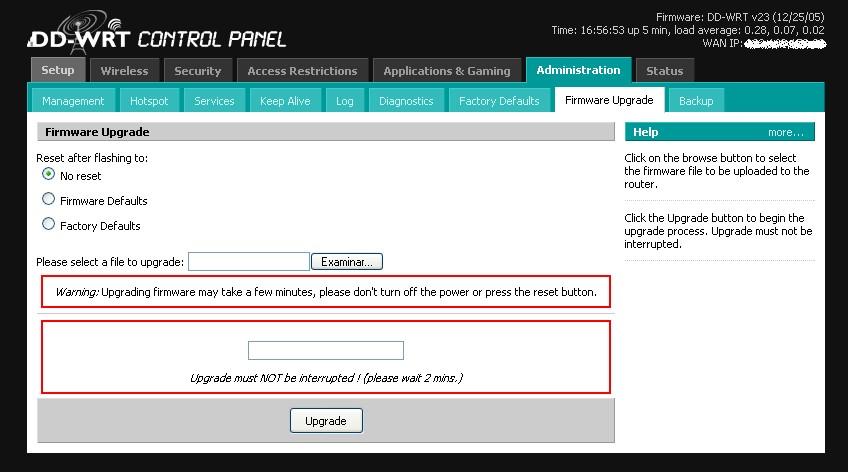

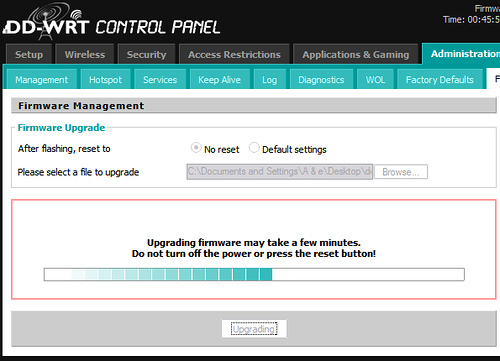

3) Upgrade Firmware

- From a PC connected to one of the 4 LAN ports on the router open a web browser and go to the IP 192.168.1.1.

- You will be prompted for username and password. Leave username blank, enter password admin. Now you should be at the Web Interface of your WRT54G v4.

- Click the "Administration" tab

- Click the "Firmware Upgrade" sub-tab.

- Click the "Browse" button and select "dd-wrt.v23_mini_generic.bin" file you extracted in step 1.

- Click the "Upgrade" button.

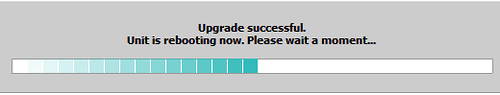

- The router will take a few minutes to upload the file, flash the firmware, and then reset.

- Here is where my experience was a bit different than the instructions said it would be. I never got a success message; instead I got a failure message. This is when I started to get a bit nervous that I had bricked my brand new router! So I started reading furiously through all the documentation I could get my hands on about recovering a bricked WRT when I relaunched "192.168.1.1" and found that DD-WRT was working! Don't ask me... but the flash seemed to have worked perfectly despite the error message I got.

http://angrycamel.com/howtos/dd-wrt/images/DD-WRT_WRT54G_v4_-StockUpgradeFailed.png

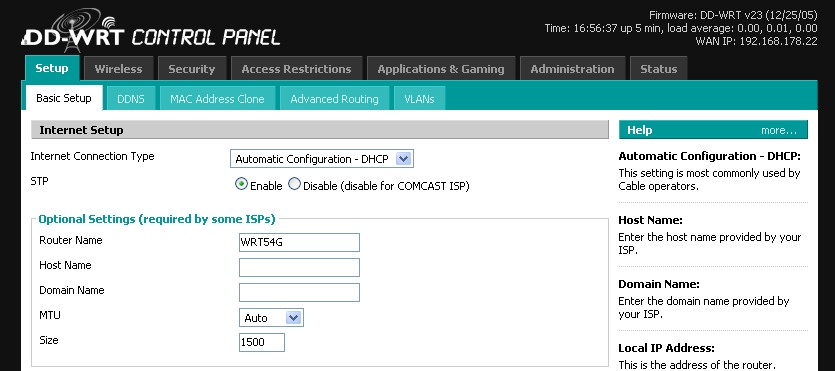

- Additional note #1: as of version v23 SP1 the default login username/password has changed from <blank>/admin to root/admin.

- Additional note #2: if you are flashing the router using Firefox, it may warn you a dozen times that some scripts are very slow, giving you the option to stop that script, or continue. You should press continue (or use an alternate browser). To fix the unresponsive script issue in Firefox, navigate to about:config, then increase the integer value of dom.max_script_run_time from 5 to 20.

- Additional note #3: make sure you are plugged in directly to the router with a network cable. Going through a switch or hub doesn't count (and believe it or not it doesn't work...I spent several hours following these instructions going through a switch with failure after failure. Please plug in directly to the router!).

- If flashed successfully you will now be at the DD-WRT web interface and the Router Name will be DD-WRT.

4) Reset to factory defaults AGAIN

- Repeat step 2 above. Note: step 2b (the manual way of clearing the NVRAM) is recommended to make sure the radio gets the correct parameters.

5) Upgrade the Firmware to DD-WRT STD v23

- It is strongly advised that you enable the "Boot Wait" option under the "Administration" tab before continuing. This will help you recover in the future should you flash your router improperly. If you use v23 or higher the "Boot Wait" option is enabled by default.

- Download the STD Version 23 HERE (dd-wrt.v23_sp2_standard.zip) or go to the DD-WRT downloads section at http://www.dd-wrt.com/dd-wrtv3/dd-wrt/downloads.html

- Extract it

- Click the "Administration" tab

- Click the "Firmware Upgrade" sub-tab.

- Hit the browse button and select dd-wrt.v23_generic.bin that you just extracted.

- You should see the progress of the flash like so:

- If the upgrade went well then you should see a success message. You're done!

6) Troubleshooting

- If your router fails to reboot (power light doesn't stop flashing, no web interface, etc) you will need to Recover from a Bad Flash.

* / means the top-level directory. To search a particular directory, substitute it for /.

- Find files whose name contains foobar

find / -name "*foobar*" -print

- Find files owned by a particular user (foobar)

find / -user foobar -print | more

- Find files changed within the last day

find / -ctime -1 -a -type f | xargs ls -l | more

- Find old files (not modified for more than 30 days)

find / -mtime +30 -print | more

- Write the files and directories not accessed in the last 30 days to a separate file

find / \( -type d -o -type f \) ! -atime -30 | xargs ls -l > not_access.txt

- Search only the current directory, without descending into subdirectories

find . ! -name . -prune ...

- Find files with permission 777

find / -perm 777 -print | xargs ls -l | more

- Find files that others can write to

find / -perm -2 -print | xargs ls -l | more

- Find files that others can write to and remove that write permission

find / -perm -2 -print | xargs chmod o-w

or

find / -perm -2 -exec chmod o-w {} \; -print | xargs ls -l | more

- Find files with no matching user name or group name

find / \( -nouser -o -nogroup \) -print | more

- Find empty files (size 0)

find / -empty -print | more

or

find / -size 0 -print | more

- Find files of 100M or more

find / -size +102400k -print | xargs ls -hl

- Find directories only

find . -type d ...

- Find files that run with root privileges (setuid root)

find / \( -user root -a -perm -4000 \) -print | xargs ls -l | more

- Do not search other file systems

find / -xdev ...

- Find files with a space in the name

find / -name "* *" -print

- Find hidden files

find / -name ".*" -print | more

- Find and delete *.bak files

find / -name "*.bak" -exec rm -rf {} \;

- Find *.bak files and move them to a particular directory

mv `find . -name "*.bak"` /home/bak/

- Replace a string across many files

find / -name "*.txt" -exec perl -pi -e 's/search_string/replacement_string/g' {} \;
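For systems without find, or when the filters need to be combined in a script, a few of the searches above can be approximated with the standard library. This is a rough sketch, not an exact equivalent: the function name `find_files` and its parameters are made up here, and the size and age comparisons only roughly match the rounding rules of `-size` and `-mtime`.

```python
import os
import stat
import time


def find_files(root, min_size=None, older_than_days=None, world_writable=False):
    """Yield regular files under root matching all the given filters."""
    now = time.time()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                info = os.lstat(path)
            except OSError:
                continue  # vanished or unreadable; skip it
            if not stat.S_ISREG(info.st_mode):
                continue  # skip symlinks, sockets, and other non-regular files
            if min_size is not None and info.st_size < min_size:
                continue
            if older_than_days is not None:
                if now - info.st_mtime < older_than_days * 86400:
                    continue
            if world_writable and not info.st_mode & stat.S_IWOTH:
                continue
            yield path


# Roughly "find . -size +102400k": files of about 100 MiB or more below ".".
for path in find_files(".", min_size=100 * 1024 * 1024):
    print(path)
```

Passing `older_than_days=30` roughly corresponds to `-mtime +30`, and `world_writable=True` to `-perm -2` restricted to regular files.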

from: http://www.raibledesigns.com/page/rd/20030312

I changed my shortcut icon (Win2K) to have the following as its target:

eclipse.exe -vmargs -Xverify:none -XX:+UseParallelGC -XX:PermSize=20M -XX:MaxNewSize=32M -XX:NewSize=32M -Xmx256m -Xms256m

Eclipse now starts in a mere 6 seconds (2 GHz Dell, 512 MB RAM). Without these extra settings, it takes 11 seconds to start. That's what I call a performance increase! (2003-03-12 09:32:04.0)

from: http://www.raibledesigns.com/comment.do?method=edit&entryid=065039163189104748672473500018

I tried this out, but the memory settings don't seem to have anything to do with startup time.

18 seconds - "eclipse.exe"

13 seconds - "eclipse.exe -vmargs -Xverify:none"

12 seconds - "eclipse.exe -vmargs -Xverify:none -XX:+UseParallelGC -XX:PermSize=20M -XX:MaxNewSize=32M -XX:NewSize=32M -Xmx96m -Xms96m"

It's only the Xverify:none parameter which has a noticeable effect on reducing startup time. On the java website I found that this parameter turns off bytecode verification ( http://developer.java.sun.com/developer/onlineTraining/Security/Fundamentals/Security.html ), although the default is supposedly "only verify classes loaded over the network".

Survey of AJAX/JavaScript Libraries

Please note these libraries appear in alphabetical order. If you're adding one to this list, please add it in alphabetical order rather than sticking it at the top.

There is another excellent and comprehensive list of libraries at EDevil's Weblog.

AjaxAnywhere

http://ajaxanywhere.sourceforge.net

License: Apache 2

Description: AjaxAnywhere is a simple way to enhance an existing JSP/Struts/Spring/JSF application with AJAX. It uses AJAX to refresh "zones" on a web page, therefore AjaxAnywhere doesn't require changes to the underlying code, so while it's more coarse than finely-tuned AJAX, it's also easier to implement, and doesn't bind your application to AJAX (i.e., browsers that don't support AJAX can still work.). In contrast to other solutions, AjaxAnywhere is not component-oriented. You will not find here yet another AutoComplete component. Simply separate your web page into multiple zones, and use AjaxAnywhere to refresh only those zones that need to be updated.

Pros:

- Less JavaScript to develop and to maintain. Absence of commonly accepted naming convention, formatting rules, patterns makes JavaScript code messier than Java/JSP. It is extremely difficult to debug and unit-test it in multi-browser environment. Get rid of all those complexities by using AjaxAnywhere.

- Easy to integrate. AjaxAnywhere does not require changing the underlying application code.

- Graceful degradation and lower technical risk. Switch whenever you need between AJAX and traditional (refresh-all-page) behaviour of your web application. Your application can also support both behaviors.

- Free open source license.

Cons:

- AjaxAnywhere is not as dynamic as pure-JavaScript AJAX solutions. Although AjaxAnywhere will probably cover your basic needs, to achieve certain functionality you might need to code some JavaScript.

- Today, you can only update a set of complete DHTML objects without breaking them apart. For example, you can update the content of a table cell or the whole table, but not just the last row. In later versions, we plan to implement partial DHTML update, as well.

AjaxTags component of Java Web Parts

http://javawebparts.sourceforge.net

License: Apache 2

Description: AjaxTags was originally an extended version of the Struts HTML taglib, but was made into a generic taglib (i.e., not tied to Struts) and brought under the Java Web Parts library. AjaxTags is somewhat different from most other Ajax toolkits in that it provides a declarative approach to Ajax. You configure the Ajax events you want for a given page via XML config file, including what you want to happen when a request is sent and when a response is received. This takes the form of request and response handlers. A number of rather useful handlers are provided out-of-the-box, things like updating a <div>, sending a simple XML document, transforming returned XML via XSLT, etc. All that is required to make this work on a page is to add a single tag at the end, and then one following whatever page element you want to attach an Ajax event to. Any element on a page can have an Ajax event attached to it. If this sounds interesting, it is suggested you download the binary Java Web Parts distro and play with the sample app. This includes a number of excellent examples of what AjaxTags can do.

Pros:

- There is no Javascript coding required, unless you need or want to write a custom request/response handler, which is simply following a pattern.

- Very easy to add Ajax functions to an existing page without changing any existing code and without adding much beyond some tags. Great for retroactively adding Ajax to an app (but perfect for new development too!)

- Completely declarative approach to Ajax. If client-side coding is not your strong suit, you will probably love AjaxTags.

- Has most of the basic functions you would need out-of-the-box, with the flexibility and extensibility you might need down the road.

- Cross-browser support (IE 5.5+ and FF 1.0.7+ for sure, probably some older versions too).

- Is well-documented with a good example app available.

Cons:

- Because it's a taglib, it is Java-only.

- Doesn't provide pre-existing Ajax functions like many other libraries, things like Google Suggests and such. You will have to create them yourself (although AjaxTags will make it a piece of cake!). Note that there are some cookbook examples available that shows a type-ahead suggestions application, and a double-select population application. Check them out, see how simple they were to build!

- AjaxTags says absolutely nothing about what happens on the server, that is entirely up to you.

- Might be some slight confusion because there is another project named AjaxTags at SourceForge. The AjaxTags in Java Web Parts existed first though, and in any case they have very different focuses. Just remember, this AjaxTags is a part of Java Web Parts, the other is not.

Dojo

License: Academic Free License v 2.1.

Description: Dojo is an Open Source effort to create a UI toolkit that allows a larger number of web application authors to easily use the rich capabilities of modern browsers.

Pros:

- Dev roadmap encompasses a broad range of areas needed to do browser-based app development -- Even the 0.1 release includes implementations of GUI elements, AJAX-style communication with the server, and visual effects.

- Build system uses Ant, so core devs seem to be on friendly terms with Java.

- Browser compatibility targets are: degrade gracefully in all cases, IE 5.5+, Firefox 1.0+, latest Safari, latest Opera

- Most core parts of the system are unit tested (and unit tests are runnable at the command line)

- Includes package system and build system that let you pull in only what you need

- Comprehensive demo at http://archive.dojotoolkit.org/nightly/tests/

Cons:

- Documentation is lacking, but what's available is linked from http://dojotoolkit.org/docs .

DotNetRemoting Rich Web Client SDK for ASP.NET

License: - commercial license

Description: Rich Web Client SDK is a platform for developing rich internet applications (including AJAX). The product is available for .NET environment and includes server side DLL and a client side script

Pros:

- No need for custom method attributes, special signatures or argument types. Does not require stub or script generation.

- Available for .NET

- Automatically generates the objects from the existing .Net classes.

- Supports Hashtable and ArrayList on the client

- The client and the server objects are interchangeable

- Can invoke server methods from the client with classes as arguments

- Very easy to program

- Professional support

Cons:

- Lacks out of the box UI components.

DWR

http://www.getahead.ltd.uk/dwr/

License: Apache 2.0

Description: DWR (Direct Web Remoting) is easy AJAX for Java. It reduces development time and the likelihood of errors by providing commonly used functions and removing almost all of the repetitive code normally associated with highly interactive web sites.

Pros:

- Good integration with Java.

- Extensive documentation.

- Supports many browsers including FF/Moz, IE5.5+, Safari/Konq, Opera and IE with Active-X turned off via iframe fallback

- Integration with many Java OSS projects (Spring, Hibernate, JDOM, XOM, Dom4J)

- Automatically generated test pages to help diagnose problems

Cons:

- Java-exclusive -- seems to be Java code that generates JavaScript. This limits its utility in non-Java environments, and the potential reusability by the community.

JSON-RPC-JAVA

http://oss.metaparadigm.com/jsonrpc/index.html

License: JSON-RPC-Java is licensed under the LGPL which allows use within commercial/proprietary applications (with conditions).

Description: JSON-RPC-Java is a key piece of Java web application middleware that allows JavaScript DHTML web applications to call remote methods in a Java Application Server (remote scripting) without the need for page reloading (as is the case with the vast majority of current web applications). It enables a new breed of fast and highly dynamic enterprise Java web applications (using similar techniques to Gmail and Google Suggests).

Pros:

- Exceptionally easy to use and setup

- Transparently maps Java objects to JavaScript objects.

- Supports Internet Explorer, Mozilla, Firefox, Safari, Opera and Konqueror

Cons:

- JavaScript Object Notation can be difficult to read

- Possible scalability issues due to the use of HTTPSession

MochiKit

License: MIT or Academic Free License, v2.1.

Description: "MochiKit makes JavaScript suck less." MochiKit is a highly documented and well tested suite of JavaScript libraries that will help you get shit done, fast. We took all the good ideas we could find from our Python, Objective-C, etc. experience and adapted it to the crazy world of JavaScript.

Pros:

- Test-driven development -- "MochiKit has HUNDREDS of tests."

- Exhaustive documentation -- "You're unlikely to find any JavaScript code with better documentation than MochiKit. We make a point to maintain 100% documentation coverage for all of MochiKit at all times."

- Supports latest IE, Firefox, Safari browsers

Cons:

- Support for IE is limited to version 6. According to the lead dev on the project, "IE 5.5 might work with a little prodding."

- Sparse visual effects library

Plex Toolkit

http://www.plextk.org - see http://www.protea-systems.com (and view source!) for sample site

License: - LGPL or GPL (optional)

Description: Open source feature-complete DHTML GUI toolkit and AJAX framework based on a Javascript/DOM implementation of Macromedia's Flex technology. Uses the almost identical markup language to Flex embedded in ordinary HTML documents for describing the UI. Binding is done with Javascript.

Pros:

- Full set of widgets such as datagrid, tree, accordion, pulldown menus, DHTML window manager, viewstack and more

- Markup driven (makes it easy to visually build the interface)

- Interface components can be easily themed with CSS

- Client side XSLT for IE and Mozilla

- Well documented with examples.

- Multiple remoting transport options - XMLHttpRequest, IFrame (RSLite cookie based coming soon)

- Back button support

- Support for YAML serialization

Cons:

- Lacks animation framework.

Prototype

License: MIT

Description: Prototype is a JavaScript framework that aims to ease development of dynamic web applications. Its development is driven heavily by the Ruby on Rails framework, but it can be used in any environment.

Pros:

- Fairly ubiquitous as a foundation for other toolkits -- used in both Scriptaculous and Rico (as well as in Ruby on Rails)

- Provides fairly low-level access to XMLHttpRequest

Cons:

- IE support is limited to IE6.

- No documentation other than the README: "Prototype is embarrassingly lacking in documentation. (The source code should be fairly easy to comprehend; I’m committed to using a clean style with meaningful identifiers. But I know that only goes so far.)" There's an unofficial reference page at http://www.sergiopereira.com/articles/prototype.js.html and some scattered pieces at the Scriptaculous Wiki.

- Has no visual effects library (but see Scriptaculous)

- Has no built-in way of accessing AJAX result as an XML doc.

- Modifications to Object.prototype apparently cause issues with for/in loops when iterating through arrays. I could not find any information to indicate that this problem has been fixed.

Rialto

http://rialto.application-servers.com/

License: Apache 2.0

Description: Rialto is a cross browser javascript widgets library. Because it is technology agnostic it can be encapsulated in JSP, JSF, .Net or PHP graphic components.

Pros:

- Technology Agnostic

- Finition of widgets

- Vision of the architecture

- Tab drag and drop

Cons:

- Scant documentation -- currently consists of two PDFs and a single Weblog entry.

- Supports IE6.x and Firefox 1.x.

- Documentation

Rico

License: Apache 2.0

Description: An open-source JavaScript library for creating rich Internet applications. Rico provides full Ajax support, drag and drop management, and a cinematic effects library.

Pros:

- Corporate sponsorship from Sabre Airline Solutions (Apache 2.0 License). This means that the code is driven by practical business use-cases, and user-tested in commercial applications.

- Extensive range of functions/behaviors.

Cons:

- Scant documentation -- currently consists of two PDFs and a single Weblog entry.

- Supports IE5.5 and higher only. Also, no Safari support. From the Web site: "Rico has been tested on IE 5.5, IE 6, Firefox 1.0x/Win Camino/Mac, Firefox 1.0x/Mac. Currently there is no Safari or Mac IE 5.2 support. Support will be provided in a future release for Safari."

- Has no built-in way of accessing AJAX result as an XML doc.

- Drag-and-drop apparently broken in IE (tested in IE6)

SAJAX

http://www.modernmethod.com/sajax/

License: BSD

Description: Sajax is an open source tool to make programming websites using the Ajax framework — also known as XMLHTTPRequest or remote scripting — as easy as possible. Sajax makes it easy to call PHP, Perl or Python functions from your webpages via JavaScript without performing a browser refresh. The toolkit does 99% of the work for you so you have no excuse to not use it.

Pros: Supports many common Web-development languages.

Cons:

- Seems limited just to AJAX -- i.e., XMLHttpRequest. No visual effects library or anything else.

- Integrates with backend language, and has no Java or JSP support.

Scriptaculous

License: MIT

Description: Script.aculo.us provides you with easy-to-use, compatible and, ultimately, totally cool! JavaScript libraries to make your web sites and web applications fly, Web 2.0 style. A lot of the more advanced Ajax support in Ruby on Rails (like visual effects, auto-completion, drag-and-drop and in-place-editing) uses this library.

Pros:

- Well-designed site actually has some reference materials attached to each section.

- Lots of neat visual effects.

- Simple and easy to understand object-oriented design.

- Although developed together with Ruby on Rails it's not dependent on Rails at all, has been used successfully at least in Java and PHP projects and should work with any framework.

- Because of the large user-group coming from Ruby on Rails it has very good community support.

Cons:

- No IE5.x support -- see Prototype -- Cons 1., above.

TIBCO General Interface (AJAX RIA Framework and IDE since 2001)

TIBCO General Interface is a mature AJAX RIA framework that's been at work powering applications at Fortune 100 and US Government organizations since 2001. In fact the framework is so mature that TIBCO General Interface's visual development tools themselves run in the browser alongside the AJAX RIAs as you create them.

See an amazing demo in Jon Udell's coverage at InfoWorld. http://weblog.infoworld.com/udell/2005/05/25.html

You can also download the next version of the product and get many sample applications from their developer community site via https://power.tibco.com/app/um/gi/newuser.jsp

Pros:

- Dozens of types of extensible GUI components

- Vector based charting package

- Support for SOAP communication (in addition to your basic HTTP and XML too)

- Full visual development environment - WYSIWYG GUI layouts - step-through debugging - code completion - visual tools for connecting to services

- An active developer community at http://power.tibco.com/GI

Cons:

- Firefox support is in the works but not yet released, so this is still something for enterprises where Internet Explorer is on 99.9% of business user desktops, and probably not for web sites that face the general population.

More info at http://developer.tibco.com

WebORB

http://www.themidnightcoders.com

License: Standard Edition is free, Professional Edition - commercial license

Description: WebORB is a platform for developing AJAX and Flash-based rich internet applications. The product is available for Java and .NET environments and includes a client-side toolkit, Rich Client System, to enable binding to server-side objects (Java, .NET, web services, EJB, ColdFusion), data paging and interactive messaging.

Pros:

- Zero-changes deployment. Does not require any modifications on the server-side code, no need for custom method attributes, special signatures or argument types. Does not require stub or script generation.

- Available for Java and .NET

- One-line API client binding. Same API to bind to any supported server-side type. Generated client-side proxy objects have the same methods as their remote counterparts

- Automatically adapts client side method arguments to the appropriate types on the server. Supports all possible argument types and return values (primitives, strings, dates, complex types, collections, data and result sets). Server-side arguments can be interfaces or abstract types

- Includes a highly dynamic message server to allow clients to message each other. Server side code can push data to the connected clients (both AJAX and Flash)

- Supports data paging. Clients can retrieve large data sets in chunks and efficiently page through the results.

- Extensive security. Access to the application code can be restricted on the package/namespace, class or method level.

- Detailed documentation.

- Professional support

Cons:

- Lacks out of the box UI components.

Zimbra

License: Zimbra Ajax Public License ZAPL (derived from Mozilla Public License MPL)

Description: Zimbra is a recently released client/server open source email system. Buried deep within this product is an excellent Ajax Tool Kit component library (AjaxTK) written in JavaScript. A fully featured demo of the product is available on zimbra.com, and showcases the extensive capabilities of their email client. A very large and comprehensive widget library, of the kind previously available only in commercial Ajax toolkits, is now available to the open source community. Download the entire source tree to find the AJAX directory, which includes example applications.

Pros:

- Full support of drag and drop in all widgets. Widgets include data list, wizard, button, text node, rich text editor, tree, menus, etc.

- Build system uses Ant and hosting is based on JSP and Tomcat.

- Very strong client-side, MVC-based architecture; the architect is an ex-JavaSoft lead developer.

- Communications support for client-side SOAP and XmlHttpRequest, as well as iframes.

- Support for JSON serialized objects, and Javascript-based XForms.

- Strong multi-browser capabilities: IE 5.5+, Firefox 1.0+, latest Safari

- High-quality widgets of commercial quality, since this is a commercial open source product.

- Widget library is available as a separate build target set from the main product.

- Debugging facility is built in to library, displays communications request and response.

- License terms make it suitable for inclusion in other commercial products free of charge.

Cons:

- Does not currently support: Keyboard commands in menus, in-place datasheet editing.

- Does not support graceful degradation to iframes if other transports are unavailable.

- Documentation is lacking, but PDF white paper describing widget set and drag and drop is available.

Others

Xajax

Description: PHP-centric, looks very new

License: LGPL

SACK

http://twilightuniverse.com/projects/sack/

Description: New, limited feature set

License: modified X11

Last ten contributors to AjaxLibraries

- FrankZammetti -- Revision 1.23 on date Mon, 12 Dec 2005 06:57:26 GMT

- DidierGirard -- Revision 1.22 on date Mon, 21 Nov 2005 13:34:18 GMT

- MatthewEernisse -- Revision 1.21 on date Tue, 15 Nov 2005 18:56:30 GMT

- EdgarPoce -- Revision 1.20 on date Sat, 05 Nov 2005 15:51:40 GMT

- RichardHundt -- Revision 1.19 on date Fri, 04 Nov 2005 22:08:34 GMT

- JonathanEllis -- Revision 1.18 on date Fri, 28 Oct 2005 03:46:49 GMT

- KevinHakman -- Revision 1.17 on date Wed, 26 Oct 2005 22:23:48 GMT

- AlexDoue -- Revision 1.16 on date Thu, 20 Oct 2005 02:58:38 GMT

- VitaliyShevchuk -- Revision 1.15 on date Wed, 19 Oct 2005 12:54:22 GMT

- JeffPapineau -- Revision 1.14 on date Tue, 18 Oct 2005 07:24:33 GMT

Source: Tong - 디밥's Web 2.0 Tong

Transcript:

Douglas Crockford: Welcome. I’m Doug Crockford of Yahoo! and today we’re going to talk about Ajax performance. It’s a pretty dry topic, sort of a heavy topic, so we’ll ease into it by first talking about a much lighter subject: brain damage. How many of you have seen the film Memento? Brilliant film, highly recommend it. If you haven’t seen it, go get it and watch it on DVD a couple of times. The protagonist is Leonard Shelby, and he had an injury to his head which caused some pretty significant brain damage; in particular, he is unable to form new memories. So he is constantly having to remind himself what the context of his life is, and so he keeps a large set of notes and photographs and even tattoos, which are constant reminders to him as to what the state of the world is. But it takes a long time for him to sort through that stuff and try to rediscover his current context, and so he’s constantly reintroducing himself to people, starting over, making bad decisions around the people he’s interacting with, because he really doesn’t know what’s going on around him.

If Leonard were a computer, he would be a Web Server. Because Web Servers work pretty much the same way. They have no short term memory which goes from one context to another — they’re constantly starting over, sending stuff back to the database, having to recover every time someone ‘talks’ to them. So having a conversation with such a thing has a lot of inefficiencies built into it. But the inefficiencies we’re going to talk about today are more related to what happens on the browser side of that conversation, rather than on the server.

So the web was intended to be sessionless, because being sessionless is easier to scale. But it turns out our applications are mainly sessionful, and so we have to make sessions work on the sessionless technology. We use cookies for pseudosessions, so that we can correlate what would otherwise be unconnected events and turn them into a stream of events, which looks more like a session. Unfortunately cookies enable cross-site scripting and cross-site request forgery attacks, so it’s not a perfect solution. Also in this model, every action results in a page replacement, which is costly, because pages are heavy, complicated, multi-part things, and assembling one of those and getting it across the wire can take a lot of time. And that definitely gets in the way of interactivity. So the web is a big step backwards in interactivity, but fortunately we can get most of that interactive potential back.

“When your only tool is a hammer, every problem looks like a webpage.” This is an adage you may have heard before — this is becoming less true because of Ajax. In the Ajax revolution, a page is an application which has a data connection to a server, and is able to update itself over time, avoiding a page replacement. So when the user does something, we send a JSON message to the server, and we can receive a JSON message as the result.

A JSON message is less work for the server to generate, it moves much faster on the wire because it’s smaller, and it’s less work for the browser to parse and render than an HTML document. So, a lot of efficiencies are gained here. Here’s a time map of a web application. We start with the server on this side [motions left], the browser on the other [motions right], time running down. The first event is the browser makes a request, probably a URL or a GET request, going to the server. The server responds with an HTML payload, which might actually be in multiple parts because it’s got images, and scripts, and a lot of other stuff in it. And it’s a much bigger thing than the GET request, so it takes lots of packets. It can take a significant amount of time. And Steve Souders has talked a lot about the things that we can do to reduce this time. The user then looks at the page for a moment, clicks on something, which will cause another request, and in the conventional web architecture, the response is another page. And this is a fairly slow cycle.

Now in Ajax — we start the same, so the page is still the way we deliver the application. But now when the user clicks on something, we’ll generate a request for some JSON data, which is about the same size as the former GET request, but the response is now much smaller. And we can do a lot of these now —so instead of the occasional big page replacement, we can have a lot of these little data replacements, or data updates, happening. So it is significantly more responsive than the old method.
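As a rough illustration of the small data updates described above, the sketch below merges a JSON message into a client-side model instead of replacing a page. The message fields (`unread`, `subject`) and the `applyUpdate` helper are hypothetical and do not come from the talk.

```javascript
// Hypothetical client-side model; the field names are assumptions for illustration.
const model = { folder: "Inbox", unread: 2, subject: "" };

// Parse a small JSON message from the server and merge it into the model.
// Only the fields present in the message change; nothing else is rebuilt.
function applyUpdate(target, messageText) {
    const update = JSON.parse(messageText);
    Object.keys(update).forEach(function (key) {
        target[key] = update[key];
    });
    return target;
}

// A response of a few dozen bytes, in place of a whole new HTML page.
const response = '{"unread": 3, "subject": "Lunch?"}';
applyUpdate(model, response);
```

After the call, `model.unread` and `model.subject` reflect the message while `model.folder` is untouched, which is the whole point: the update costs a parse and a couple of assignments rather than a page load.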

One of the difficult things in designing such an application is getting the division of labour right: how should the application be divided between the browser and the server? This is something most of us don’t have much good experience with, and so that leads us to the pendulum of despair. In the first stage of the swing, everything happened on the server — the server looked on the browser as being a terminal. So all of the work is going on here, and we just saw the inefficiencies that come from that. When Ajax happened, we saw the pendulum swing to the other side — so everything’s happening on the browser now, with the view now that the server is just a file system.

I think the right place is some happy middle — so, we need to seek the middle way. And I think of that middle way as a pleasant dialogue between specialized peers. Neither is responsible for the entire application, but each is very competent at its specialty.

So when we Ajaxify an application, the client and the server are in a dialog, and we make the messages between them as small as possible. I think that’s one of the key indications of whether you’re doing your protocol design properly. If your messages tend to be huge because, say, you’re trying to replicate the database in the browser, then I would say you’re not in the middle, you’re still way off to one end. The browser doesn’t need a copy of the database. It just needs, at any moment, just enough information that the user needs to see at that moment. So don’t try to rewrite the entire application in JavaScript; I think that’s not an effective way to use the browser. For one reason: the browser was not intended to do any of this stuff, and as you’ve all experienced, it doesn’t do any of this stuff very well. The browser is a very inefficient application platform, and if your application becomes bloated, performance can become very bad. So you need to try to keep the client programming as light as possible, to get as much of the functionality as you need, without making the application itself overly complex, which is a difficult thing to do.

Amazingly, the browser works, but it doesn’t work very well — it’s a very difficult platform to work with. There are significant security problems, there are significant performance problems. It simply wasn’t designed to be an application delivery system. But it got enough stuff right, perhaps by accident or perhaps by clever design, that we’re able to get a lot of the stuff done with it anyway. But it’s difficult doing Ajax in the browser because Ajax pushes the browser really hard — and as we’ll see, sometimes the browser will push back.

But before thinking about performance, I think absolutely the first thing you have to worry about is correctness. Don’t worry about optimization until you have the application working correctly. If it isn’t right, it doesn’t matter if it’s fast. But that said, test for performance as early as possible; don’t wait until you’re about to ship to decide to test to see if it’s going to be fast or not. You want to get the bad news as early in the development cycle as possible. And test in customer-like configurations: customers will have slow network connections, they’ll have slow computers. Testing on the developer box on the local network to a developer server is probably not going to be a good test of your application. It’s really easy for the high performance of the local configuration to mask your sensitivity to latency problems.

Donald Knuth of Stanford said that “premature optimization is the root of all evil,” and by that he means ‘don’t start optimizing until you know that you need to.’ So, one place that you obviously know you need optimizing is in the start up, getting the initial page load in. And the work we’ve done here on Exceptional Performance is absolutely the right stuff to be looking at in order to reduce the latency of the page start up. So don’t optimize until you need to, but find out as early as possible if you do need to. Keep your code clean and correct, because clean code is much easier to optimize than sloppy code. So starting from a correct base, you’ll have a much easier time. Tweaking for performance, I’ve found, is generally ineffective and should be avoided, and I’ll show you some examples of that. Sometimes restructuring or redesign is required. That’s something we don’t like to do, because it’s hard and expensive. But sometimes that’s the only thing that works.

So let me give you an example of refactoring. Here’s a simple Fibonacci function. Fibonacci is a series in which each value is the sum of the two previous values, and this is a very classic way of writing it. Unfortunately, this recursive definition has some performance problems. So if I ask for the 40th Fibonacci number, it will end up calling itself over 300,000,000 times. No matter how you try to optimize this function, having to deal with a number that big, you’re not ever going to get it to a satisfactory level of performance. So here I can’t fiddle with the code to make it go faster; I’m going to have to do some major restructuring.
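The slide is not reproduced in the transcript, so the following is only a sketch of the classic recursive definition being described. The `calls` counter is an addition for illustration, and a small argument is used so the example finishes instantly.

```javascript
let calls = 0; // counts every invocation; added for illustration only

// The classic recursive definition: each value is the sum of the two before it.
function fibonacci(n) {
    calls += 1;
    return n < 2 ? n : fibonacci(n - 1) + fibonacci(n - 2);
}

const value = fibonacci(20);
// value is 6765, reached through 21,891 calls.
```

The call count grows roughly as fast as the Fibonacci numbers themselves, which is why asking for the 40th value runs into the hundreds of millions of calls.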

So one approach to restructuring would be to put in a memoizer, a similar idea to caching, where I will remember every value that the thing created, and if I am ever asked to produce something for the same parameter, I can return the thing from the lookup rather than having to recompute it. So, here’s a function which does that. I pass it an array of values and a fundamental function, and it will return a function which, when called, will look to see if it already knows the answer. If so, it will return it, and if not, it will call the fundamental function and pass its shell into that function, so that it can recurse on it. So using that, I can then plug in a simple definition of Fibonacci, here, and when I call Fibonacci on 40, it ends up calling itself 38 times. And it has been doing a little bit more work in those 38 times than it did on each individual iteration before, but we’ve got a huge reduction in the number, an optimization of about 10 million, which is significant. And that’s much better than you’re ever going to do by tweaking and fiddling. So sometimes you just have to change your algorithm.
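The memoizer on the slide is not included in the transcript. A minimal sketch that follows the spoken description (an array of known values, a fundamental function, and a returned shell) might look like this; the names `memoizer`, `shell`, and `recur` are assumptions.

```javascript
// Returns a function that remembers every result it has produced.
// `memo` is an array of already-known values, indexed by argument.
// `fundamental` computes a new value and receives the shell so it can recurse.
function memoizer(memo, fundamental) {
    function shell(n) {
        let result = memo[n];
        if (typeof result !== "number") {
            result = fundamental(shell, n); // not known yet: compute it once
            memo[n] = result;               // remember it for later calls
        }
        return result;
    }
    return shell;
}

// Fibonacci seeded with its first two values.
const fibonacci = memoizer([0, 1], function (recur, n) {
    return recur(n - 1) + recur(n - 2);
});

const fortieth = fibonacci(40);
```

Each value is computed at most once, so the work grows linearly with the argument instead of exponentially.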

So, getting back to the code quality. High quality code is most likely to avoid platform problems, and I recommend the Code Conventions for JavaScript Programming Language, which you can find at http://javascript.crockford.com/code.html. I also highly recommend that you use JSLint.com on all of your code. Your code should be going through without warnings — that will increase the likelihood that it’s going to work on all platforms. It’s not a guarantee, but it is a benefit. And it can catch a large class of errors that are difficult to catch otherwise. Also to improve your code quality, I recommend that you have regular code readings. Don’t wait until you’re about to release to read through the code — I recommend every week, get the whole team sitting at a table, looking at your stuff. It’s a really, really good use of your time. Experienced developers can lead by example, showing the others how stuff’s done. Novice developers can learn very quickly from the group, problems can be discovered early — you don’t have to wait until integration to find out if something’s gone wrong. And best of all — good techniques can be shared early. It’s a really good educational process.

If you finally decide you have to optimize, there are generally two ways you can think about optimizing JavaScript. There’s streamlining, which can be characterized by algorithm replacement, or work avoidance, or code removal. Sometimes we think of productivity in terms of the number of lines of code that we produce in a day, but any day when I can reduce the number of lines of code in my project, I think of that as a good day. The metrics of programming are radically different than any other human activity, where we should be rewarded for doing less, but that’s how programming works. So, these are always good things to do, and you don’t even necessarily need to wait for a performance problem to show up to consider doing these things. The other kind of optimization would be special casing. I don’t like adding special cases, I’ve tried to avoid it as much as possible — they add cruft to the code, they increase code size, increase the number of paths that you need to test. They increase the number of places you need to change when something needs to be updated, they significantly increase the likelihood that you’re going to add errors to the code. They should only be done when it’s proven to be absolutely necessary.

I recommend avoiding unnecessary displays or animation. When Ajax first occurred, we saw a lot of ‘wow’ demonstrations, like ‘wow, I didn’t know a browser could do that’ and you see things chasing around the screen, or opening, or doing that stuff [gestures]. There are a lot of project managers, and project [unclear], who look at that stuff not understanding how those applications are supposed to deliver value; they get on that stuff instead, because it’s shiny and… But I recommend avoiding it. ‘Wow’ has a cost, and in particular as we’re looking more at widgeting as the model for application development, as we have more and more widgets, if they’re all spinning around on the screen, consuming resources, they’re going to interfere with each other and degrade the performance of the whole application. So I recommend making every widget as efficient as possible. A ‘wow’ effect is definitely worthwhile if it improves the user’s productivity, or improves the value of the experience to the user. If it’s there just to show that we can do that, I think it’s a waste of our time, it’s a waste of the user’s time.

So when you’re looking at what to optimize, only speed up things that take a lot of time. If you speed up the things that don’t take up much time, you’re not going to yield much of an improvement. Here’s a map of some time: we’ve got an application where we can divide the time spent into four major pieces. If we work really hard and optimize the C code [gestures to shortest block] so that it’s using only half the time that it is now, the result is not going to be significant. Whereas if we could invest less but get a 10% improvement on the A code [gestures to longest block], that’s going to have a much bigger impact. So it doesn’t do any good to optimize that [gestures to block C]; this is the big opportunity, this is the one to look at [gestures to block A].
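The diagram's actual numbers are not in the transcript, so the figures below are made up purely to show the arithmetic: halving a small block saves less than trimming 10% from the largest one.

```javascript
// Hypothetical time budget in milliseconds for the four pieces of an application.
const blocks = { A: 600, B: 250, C: 50, D: 100 };

// Total time if the named block is made `percentFaster` percent cheaper.
function totalAfter(parts, name, percentFaster) {
    let total = 0;
    Object.keys(parts).forEach(function (key) {
        const time = parts[key];
        total += key === name ? time * (100 - percentFaster) / 100 : time;
    });
    return total;
}

const baseline = totalAfter(blocks, "A", 0); // 1000
const halveC = totalAfter(blocks, "C", 50);  // 975
const trimA = totalAfter(blocks, "A", 10);   // 940
```

With these assumed numbers, heroic work on C buys 25 ms while a modest gain on A buys 60 ms.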

Now, it turns out, in the browser, there’s a really big disparity in the amount of time that JavaScript itself takes. If JavaScript were infinitely fast, most pages would run at about the same speed, and I’ll show you the evidence of that in a moment. The bottleneck tends to be the DOM interface; the DOM is a really inefficient API. There’s a significant cost every time you touch the DOM, and each touch can result in a reflow computation, which can be very expensive. So touch the DOM lightly, if you can. It’s faster to manipulate new nodes before they are attached to the tree; once they’re attached to the tree, any manipulating of those nodes can result in another repaint. Touching unattached nodes avoids a lot of the reflow cost. Setting innerHTML does an enormous amount of work, but the browsers are really good at that. That’s basically what browsers are optimized to do: parse HTML and turn it into trees. And it only touches the DOM once, from the JavaScript perspective, so even though it appears to be pretty inefficient, it’s actually quite efficient, comparatively. I recommend you make good use of Ajax libraries, particularly YUI; I’m a big fan of that. Effective code reuse will make the widgets more effective.
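One way to follow the advice about unattached nodes is to build a whole subtree first and attach it in a single step. The helper below is a sketch, not code from the talk; `renderList` and its parameters are hypothetical, and it takes the document as an argument so the same function can be exercised outside a browser.

```javascript
// Build a list entirely off-tree, then attach it with a single DOM touch.
// `doc` is the page's document object; `container` is an element already in the tree.
function renderList(doc, container, labels) {
    const list = doc.createElement("ul");
    labels.forEach(function (label) {
        const item = doc.createElement("li");
        item.textContent = label; // node is still unattached, so no reflow yet
        list.appendChild(item);
    });
    container.appendChild(list);  // the only change to the live tree
    return list;
}
```

In a browser this would be called as `renderList(document, document.body, ["one", "two"])`. Appending each item directly to an attached list would instead touch the live tree once per item.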

So, this is how IE8 spends its time [diagram is displayed]. This was reported by the Microsoft team at the Velocity conference this year. So, this is the average time allocation of pages of the top 100 Alexa web pages. So they’re spending about 43% of their time doing layout, 27% of their time doing rendering, less than 3% of their time parsing HTML. Again, that’s something browsers are really good at. Spending 7% of their time marshalling; that’s a cost peculiar to IE because it uses ActiveX to implement its components, and so there’s a heavy cost just for communicating between the DOM and JavaScript. 5% overhead, just messing with the DOM. CSS, formatting, a little over 8%. JScript, about 3%. OK, so if you were really heroic, and could get your JScript down to be really, really fast, most users are not even going to notice. It’s just a waste of time to be trying to optimize a piece of code that has that small an impact on the whole. What you need to think about is not how fast the individual JavaScript instructions are running, but what impact those instructions have on the layout and rendering. So, this is the case for most pages on average. Let’s look at a page which does appear to be compute bound.

This is the time spent for opening a thread on GMail, which is something that can take a noticeable amount of time. But if we look at the JScript component, it’s still under 15%. So if we could get that to go infinitely fast, it still wouldn’t make that much of a difference. In this case, it turns out CSS formatting is chewing up most of the time. So if I were on the GMail team, looking at this, I could be beating up my programmers saying ‘why is your code going so slow?’ or I could say ‘let’s schedule a meeting with Microsoft, and find out what we can do to reduce the impact of CSS expenditures in doing hovers, and other sorts of transitory effects,’ because that’s where all the time is going. So you need to be aware of that when you start thinking about: ‘how am I going to make my application go faster?’

Now, there are some things which most language processors will do for you. Most compilers will remove common subexpressions and will hoist loop invariants. But JavaScript doesn’t do that. The reason is that JavaScript was intended to be a very light, little, fast thing, and the time to do those optimizations could take significantly more time than the program itself was actually going to take. So it was considered not to be a good investment. But now we’re sending megabytes of JavaScript, and it’s a different model, but the compilers still don’t optimize, and it’s not clear that they ever will. So there are some optimizations it may make sense to make by hand. They’re not really justified in terms of performance, as we’ve seen, but I think in some cases they actually make the code read a little better. So let me show you an example.

Here I’ve got a for loop, in which I’m going to go through a set of divs, and for each of the divs I’m going to change their styling: I’m going to change the color, and the border, and the background color. Not an uncommon thing to do in an Ajax application, but I can see some inefficiencies in this. One is, I have to compute the length of the array of divs on every iteration, because of the silly way that the for statement works. I could factor that out. It’s probably not going to be a significant savings, but I could do that. A bigger cost is, I’m computing divs[i].style on every iteration. So I’m doing that three times per iteration, when I only have to do it once. And probably the biggest, depending on how big the length turns out to be, is that I’m computing the thickness parameter value on every iteration, and it’s constant in the loop. So every time I compute that, except the first time, that’s a waste. I could write this loop this way. I create a border, which’ll precompute the thickness. Also, capture the number of divs. Then in the loop, I will also capture divs[i].style, so we’ll only have to get it once, and now I can change these things through that. To my eye, this actually reads a little bit better. I can see border, yeah, this makes sense. I can see what I’m doing here. Do any of these changes affect the actual performance of the program? Probably not measurably, unless ‘n’ is really, really big. But I think it did make the code a little bit better and so I think this is a reasonable thing to do.
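The two versions of the loop are on slides that the transcript does not reproduce. The sketch below reconstructs them from the description; the function names and the specific color values are assumptions.

```javascript
// Before: the length, divs[i].style, and the border string are recomputed on each pass.
function restyleNaive(divs, thickness) {
    for (let i = 0; i < divs.length; i += 1) {
        divs[i].style.color = "black";
        divs[i].style.border = thickness + "px solid blue";
        divs[i].style.backgroundColor = "white";
    }
}

// After: everything that does not change inside the loop is computed once.
function restyleHoisted(divs, thickness) {
    const border = thickness + "px solid blue"; // loop invariant
    const count = divs.length;                  // captured once
    for (let i = 0; i < count; i += 1) {
        const style = divs[i].style;            // one lookup per iteration
        style.color = "black";
        style.border = border;
        style.backgroundColor = "white";
    }
}
```

Both functions leave the elements in the same state; the second simply names the invariant (`border`) and avoids repeating the `divs[i].style` lookup.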

I’ve seen people do experiments where there are two ways you could concatenate a string. You can use the plus operator, or you could do a ‘join’ on an array. And someone heard that ‘join’ is always faster, and so you see them doing cases where, to concatenate two things together, they’ll make an array of two things and call ‘join’. This is strictly slower, in this case, than that one. ‘Join’ is a big win if you’re concatenating a lot of stuff together. For example, if you’re doing an innerHTML build in which you’re going to build up, basically, a subdocument, and you’ve got a hundred strings that are going to get put together in order to construct that, then ‘join’ is a big win. Because every time you call ‘+’, it’s going to have to compute the intermediate result of that concatenation, so it consumes more and more memory, and copies more and more memory as it’s approaching the solution. ‘Join’ avoids that. So in that case, ‘join’ is a lot faster. But if you’re just talking about two or three pieces being put together, ‘join’ is not good. So generally, the thing that reads the best will probably work, but there are some special cases where something like ‘join’ will be more effective. But again, it’s generally effective when ‘n’ is large; for small ‘n’, it doesn’t matter.
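To make the two cases concrete, here is a small sketch; `greeting` and `buildRows` are hypothetical helpers, and the labels are assumed to be already safe to place in HTML.

```javascript
// Few pieces: plain concatenation reads best.
function greeting(name) {
    return "Hello, " + name + "!";
}

// Many pieces: collect them in an array and join once at the end,
// as one might when building a string to assign to innerHTML.
function buildRows(labels) {
    const parts = ["<ul>"];
    labels.forEach(function (label) {
        parts.push("<li>", label, "</li>");
    });
    parts.push("</ul>");
    return parts.join("");
}
```

Whether `join` is actually faster for large inputs depends on the engine, which is itself an argument for measuring rather than assuming.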

I recommend that you don’t tune for quirks. There are some operations which, in some browsers, are surprisingly slow. But I recommend that you try to avoid that as much as possible. There might be a trick that you can do which is faster on Browser A, but it might actually be slower on Browser B, and we really want to run everywhere. And the performance characteristics of the next generation of browsers may be significantly different than this one — if we prematurely optimize, we’ll be making things worse for us further on. So I recommend avoiding short term optimizations.

I also recommend that you not optimize without measuring. Our intuitions as to where our programs are spending time are usually wrong. One way you can get some data is to use dates to create timestamps between pieces of code — but even this is pretty unreliable. A single trial can be off by as much as 15ms, which is huge compared to the time most JavaScript statements are going to be running, so that’s a lot of noise. And even with accurate measurement, that can still lead to wrong conclusions. So measurement is something that has to be done with a lot of care. And evaluating the results also requires a lot of care.
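Since a single timestamp pair is dominated by noise, one common mitigation is to repeat the operation many times and divide. The helper below is a sketch of that idea, not a tool mentioned in the talk; `averageMillis` is a hypothetical name and the repetition count is arbitrary.

```javascript
// Time `fn` over many repetitions and report the average in milliseconds.
// Repeating spreads the clock's coarse resolution over many calls.
function averageMillis(fn, repetitions) {
    const start = new Date().getTime();
    for (let i = 0; i < repetitions; i += 1) {
        fn();
    }
    const elapsed = new Date().getTime() - start;
    return elapsed / repetitions;
}

const perCall = averageMillis(function () {
    return [3, 1, 2].sort();
}, 100000);
```

Even an average like this should be read with care: it includes loop and call overhead, and results vary between runs and between browsers.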

[Audience member raises hand]

Douglas: Yeah? Oh, am I aware of any tools that instrument the JavaScript engine in the browsers so that you can get better statistics on this stuff? I’m not yet, but I’m aware of some stuff which is coming, new Firebug plug-ins and things like that, which will give us a better indication as to where the time’s going. But currently, the state of tools is shockingly awful.

So, what do I mean when I talk about ‘n’? ‘N’ is the number of times that we do something. It’s often related to the number of data items that are being operated on. If an operation is performed only once, it’s not worth optimizing, but it turns out, if you look inside of it you may find that there’s something that’s happening more often. So the analysis of applications is strongly related to the analysis of algorithms, and some of the tools and models that we can look at there can help us here.

So suppose we were working on an array of things. The amount of time that we spend doing that can be mapped according to the length of the array. So, there’s some amount of start up time, which is the time to set up the loop and perhaps to tear down the loop, the fixed overhead of that operation. And then we have a line which descends as the number of pieces of data increases. And the slope of that line is determined by the efficiency of the code, or the amount of work that that code has to do. So a really slow loop will have a line like that [gestures vertically] and a really fast loop will have a line that’s almost horizontal.

So you can think of optimization as trying to change the slope of the line. Now, the reason you want to control the slope of the line is because of the axis of error. If we cross the inefficiency line, then a lot of the interactive benefits that we were hoping to get by making the user more productive, will be lost. They’ll be knocked out of flow, and they’ll actually start getting irritated — they might not even know why, but they’ll be irritated at these periods of waiting that are being imposed on them. They might not even notice, they’ll just get cranky using your application, which sets you up for some support phone calls and other problems — so you don’t want to be doing that. But even worse is, if you cross the frustration line. At this point, they become… they know that they’re being kept waiting, and it’s irritating, so satisfaction goes way down. But even worse than that is the failure line. This is the line where the browser puts up an alert, saying ‘do you want to kill the script?’ or maybe the browser locks up, or maybe they just get tired of waiting and they close the thing. This is a disaster, this is total failure. So you don’t want to do that either, so you want to not cross any of those lines, if you can avoid it — and you certainly never want to cross that one [gestures to failure line].

So to do that, that’s when you start thinking about optimizing: we’re crossing the line, we need to get down. One way we can think about it is changing the shape of the line. Sometimes if you change the algorithm, you might increase the overhead time of the loop, but in exchange for that, you can change the slope. And so, in many cases, getting a different algorithm can have a very good effect. Now, if the kind of data you’re dealing with has interactions in it, then you may be looking at an ‘n log n’ curve. In this case, changing the shape of the line won’t be much help. Or if it’s an n squared line, which is even worse, doubling the efficiency of this [gestures to point on line] only moves it slightly. So the number of cases that you can handle before you fail is only marginally increased by a heroic attempt to optimize, so that’s not a good use of your time.
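A back-of-the-envelope sketch of that last point: under a fixed time budget, doubling the efficiency of a linear loop doubles the number of items it can handle, while for an n squared cost the gain is only a factor of about 1.4. The cost models and the budget below are assumptions chosen for the arithmetic.

```javascript
// Largest n whose cost stays within `budget`, for two hypothetical cost models.
// Linear: cost = unit * n.  Quadratic: cost = unit * n * n.
function maxLinear(budget, unit) {
    return Math.floor(budget / unit);
}

function maxQuadratic(budget, unit) {
    return Math.floor(Math.sqrt(budget / unit));
}

const budget = 1000;                              // arbitrary time units
const linearBefore = maxLinear(budget, 1);        // 1000
const linearAfter = maxLinear(budget, 0.5);       // 2000
const quadraticBefore = maxQuadratic(budget, 1);  // 31
const quadraticAfter = maxQuadratic(budget, 0.5); // 44
```

Halving the per-item cost moves the quadratic case from 31 items to 44, which is why a different algorithm or a smaller ‘n’ pays off more than tuning the loop body.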

So the most effective way to make programs faster is to make ‘n’ smaller. And Ajax is really good for that. Ajax allows for just-in-time data delivery, so we don’t need to show the user everything they’re ever going to want to see, we just need to have on hand what they need to see right now. And when they need to get more, we can get more in there really fast, by having lots of short little packets in this dialog.

One final warning is: The Wall. The Wall is the thing in the browser that when you hit it, it hurts really bad. We saw a problem in [Yahoo!] Photos, back when there was [Yahoo!] Photos [which was replaced by Flickr], where they were trying to do some…they had a light table, on which you could have a hundred pictures on screen. And then you could go to the next view, which would be another hundred pictures, and so on. And thinking they wanted to make it faster to go back and forth, they would cache all the structures from the previous view. But what they found was that the first view came up really fast, but the second view took a half second more, the next view took a second more, and then the next view a second and a half more, and so on. And pretty soon you reached the failure line. And it turned out that what they had done, intending to be optimizing, was actually slowing everything down. The working set the browser was using, the memory it was using in order to keep all those previous views, which were being saved as full HTML fragments, was huge. And so the system was spending all of its time doing memory management, and there was very little time left for actually running the application. So the solution was to stop trying to optimize prematurely, and just try to make the basic process of loading one of these views as fast as possible. So the only thing they cached was the JSON data, which was pretty small, and they would rebuild everything every time, which didn’t take all that much time, because browsers are really good at that. And in doing that, they managed to get the performance down to a linear place again, where every view took the same amount of time.
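The fix described here, keeping only the small JSON data and rebuilding the markup on every view, might look roughly like the sketch below. It is not the Photos team's code: `dataCache`, `renderView`, `showView`, the `loadPhotos` callback, and the `thumb` and `title` fields are all hypothetical names chosen for illustration.

```javascript
// Cache only the small per-view data, never the rendered markup.
const dataCache = new Map(); // view index -> array of photo records

// Escape text before placing it inside HTML attributes.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Rebuild the markup from scratch each time; nothing rendered is retained.
function renderView(photos) {
  return photos
    .map((p) => `<img src="${escapeHtml(p.thumb)}" alt="${escapeHtml(p.title)}">`)
    .join("");
}

// loadPhotos(index) is assumed to return the parsed JSON for one view.
function showView(index, loadPhotos) {
  if (!dataCache.has(index)) {
    dataCache.set(index, loadPhotos(index));
  }
  return renderView(dataCache.get(index));
}
```

In a browser the returned string would be assigned to the light table container, replacing the previous view rather than parking it off to the side.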

These problems will always exist in browsers, probably. So the thing we need to be prepared to do is to back off, to rethink our applications, to rethink our approach. Because while the limits are not well understood, and not well advertised, they are definitely out there. And when you hit them, your application will fail, and you don’t want to do that. So you need to be prepared to rethink not only how you build the application, but even what the application needs to do — can it even work in browsers. For the most part, it appears to be ‘yes’, but you need to think responsively, think conversationally. The gaps don’t work very well in this device… So, that’s all I have to say about performance. Thank you.

The question was, did they have a way of flushing what they were keeping for each view? And it turned out they did. What they did was, they built a subtree in the DOM for each view of a hundred images, a hundred tiles, and they would disconnect it from the DOM and then build another one, and keep a pointer to it, so they could reclaim it. And then they’d put that one in there. And so they had this huge set of DOM fragments. And that was the thing that was slowing them down. Just having to manage that much stuff was a huge inefficiency for the browser. And they did that thinking they were optimizing — so that when you hit the back button, boom — everything’s there, and they can go back really fast. And then if you want to go forward again, you can go really fast. So they imagined they wanted to do that, and do that really effectively, but it turned out trying to enable that prevented that from working. So a lot of the things that happen in the browser are counterintuitive. So generally, the simplest approach is probably going to be the most effective.

That’s right, you don’t know what’s going to take the time — and so it becomes a difficult problem. You shouldn’t optimize until you know you need to. You need to optimize early, but you won’t be able to know until the application is substantially done. So, it’s hard.

Audience member: So it’s a matter of stay open to…

Douglas: That, and trying to stay in [...]. If you can keep testing constantly throughout the process, not wait until the end for everything to come together, that’s probably going to give you the best hope of having a chance to correct and identify performance problems.

Right, so the question is where to do pagination, essentially. Or caching. So, one approach is to have the server do the pagination, so it sends out one chunk at a time, and most websites work that way, because that’s the most responsive way to deliver the data. I can deliver 100 lines faster than I can deliver 1,000 lines, and so users like that. Even though it means that they’re going to have to click some buttons to see the other 90% of it, people have learned to do that. The other approach is, take the entire data set and send that to the browser, and let the browser partition it out. And that has pretty much the same performance problems as the other approach, where we send all 1,000 in one page. What I recommend is doing it in chunks, and each chunk can be a pair of JSON messages, and request the chunks as you need them. That appears to be the fastest way to present the stuff to the user. Now, it’s harder to program that way. It’s easier for us if it’s one complete data set, and then we go ‘oh, we’ll just take what we need from that’. So that’s easy for us, but it does not give us the best performance. And as the data set gets bigger, it’s certain to fail.
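A minimal sketch of requesting chunks as they are needed could look like the following. It assumes a caller-supplied `fetchChunk(offset, limit)` function that returns a Promise of rows, for example parsed from a JSON response. That function, `createPager`, and `getPage` are hypothetical names, not an API mentioned in the talk.

```javascript
// Request data one chunk at a time, only when a page is actually needed.
// fetchChunk(offset, limit) is assumed to return a Promise of an array of rows.
function createPager(fetchChunk, chunkSize) {
  const pending = new Map(); // page number -> Promise of rows (small data only)
  return {
    getPage(pageNumber) {
      if (!pending.has(pageNumber)) {
        // First request for this page: ask the server for just this chunk.
        pending.set(pageNumber, fetchChunk(pageNumber * chunkSize, chunkSize));
      }
      return pending.get(pageNumber);
    },
  };
}
```

With a chunk size of 100, `pager.getPage(0)` would ask only for rows 0 to 99, and the next 100 rows are requested when the user actually pages forward.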

Right. So, can we use canvas and CVS tricks to do well, is that a more effective way to accomplish that? Probably, at least in the few browsers that implement them currently. Over time, the browsers are promising to take better advantage of the graphics hardware, which is standard equipment now on virtually all PCs, so it’s unlikely that we’ll be able to get that performance boost directly from JavaScript. So it’s likely that it’s going to be on the browser side, and those sorts of components. So eventually, that’s probably going to be a good approach, although it’s not clear how well that stuff’s going to play out in the mobile devices, and that’s becoming important too, so everything’s probably going to get harder and more complicated. That’s the forecast for 2009.

BN59-00992A-01Kor.pdf

apa2000_v1.2.24.upload

iPodResetUtilitySetup.exe

proxy.UltraSurf.u95.zip